On November 30, 2021, Microsoft announced the public preview of the Azure Load Testing service, a successor to the deprecated Visual Studio Team Services/Azure DevOps Load Testing tool. I caught wind of this via Twitter while in the hotel bar that night, with my European Cloud Summit session on Scaling Websites using Azure still to come. So I did what any perfectly normal person (read: masochist) would do, and rolled the dice on building a demo that night.

All I needed to do was build a simple website, create the necessary Azure resources, deploy the code, build a JMeter script and then try the service. No problem.

For the website I decided to use the classic TODO app backed by Cosmos DB from the tutorial provided by Microsoft, modified to use Managed Identity, because good practices are good.

In short order I had a working website deployed to Azure.

After loading a few TODOs into the app I built a simple JMeter script to load the list page and then a random detail page.
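For anyone less familiar with JMeter, the flow each simulated user follows can be sketched in Python. This only illustrates the request pattern and is not the JMeter script itself. The `/Item/Details/{id}` route and the TODO IDs are hypothetical placeholders for whatever your app actually uses.

```python
import random
import urllib.request
from typing import Callable, List, Sequence


def build_user_flow(base_url: str, todo_ids: Sequence[str],
                    rng: random.Random) -> List[str]:
    """URLs one simulated user visits: the list page, then one random detail page."""
    list_url = f"{base_url}/"
    # Hypothetical route: substitute the detail URL pattern of your own app.
    detail_url = f"{base_url}/Item/Details/{rng.choice(todo_ids)}"
    return [list_url, detail_url]


def fetch_status(url: str) -> int:
    """Issue a GET request and return the HTTP status code."""
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(url, timeout=30) as response:
        return response.status


def run_user_flow(urls: Sequence[str],
                  fetch: Callable[[str], int] = fetch_status) -> List[int]:
    """Request each URL in order, as a single JMeter thread would on one iteration."""
    return [fetch(url) for url in urls]
```

Something like `run_user_flow(build_user_flow("https://<your-app>.azurewebsites.net", ["1", "2"], random.Random()))` would walk the flow once. The `fetch` parameter is injectable so the flow can be checked without touching the network.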

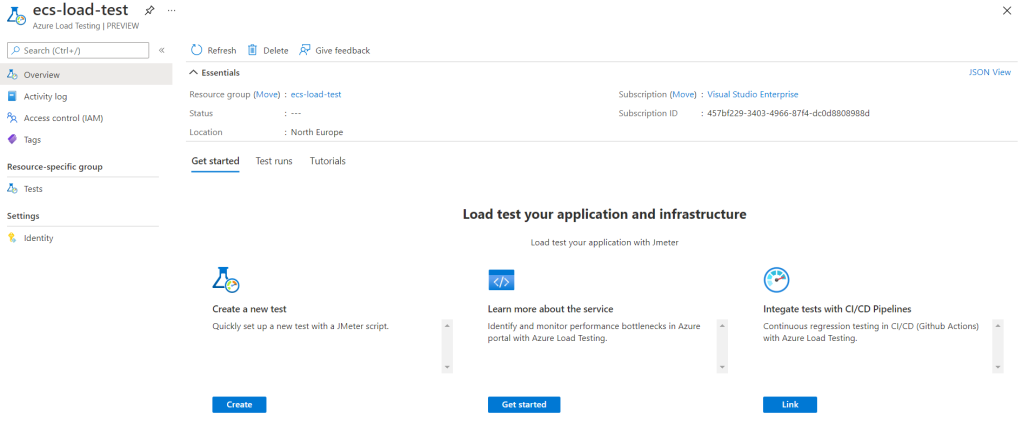

With a provisioned Load Testing service I was up and running with a working demo inside of a couple of hours.

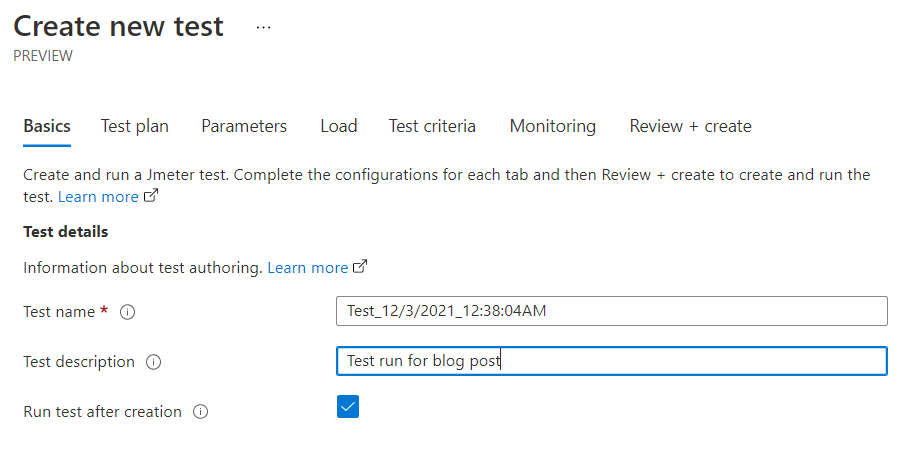

Creating a test run is easy:

Give your run a name and a description, and choose whether you want it to run after creation:

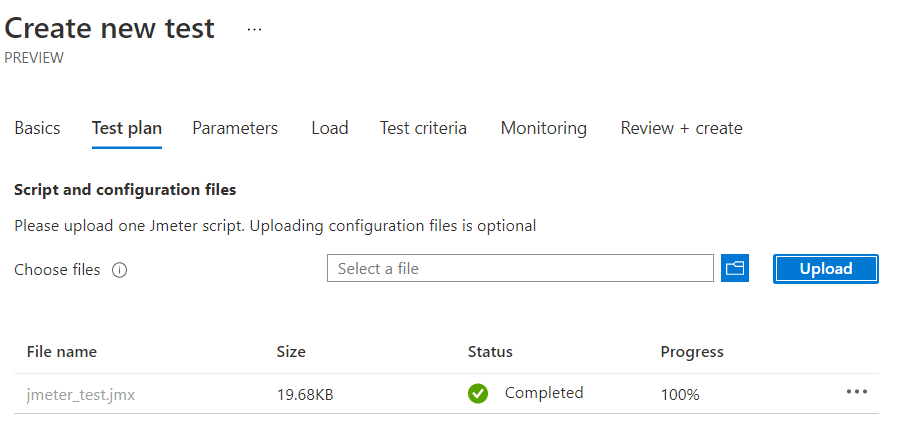

Upload your JMeter script and optional configuration files:

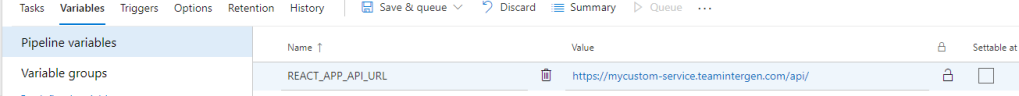

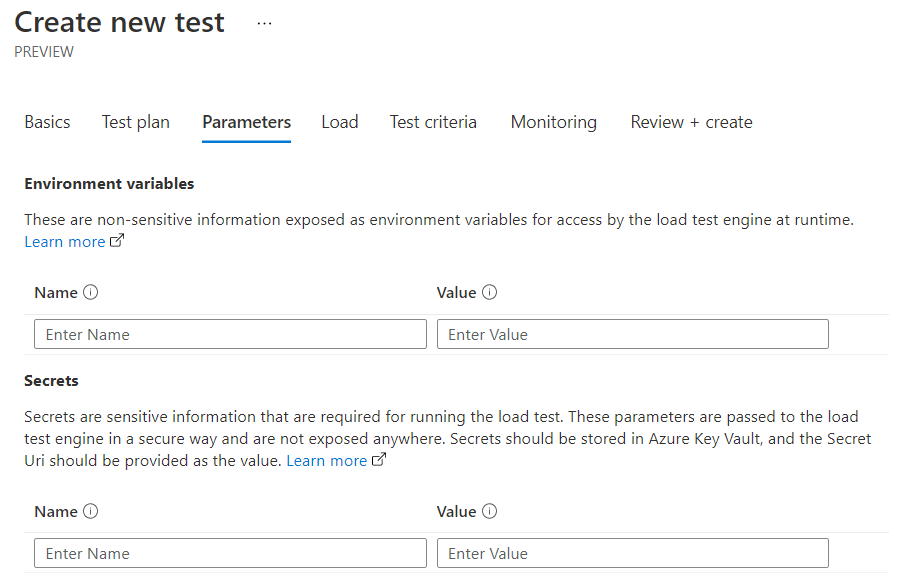

Set any environment variables and secrets needed for the test run. In my case I didn’t need any:

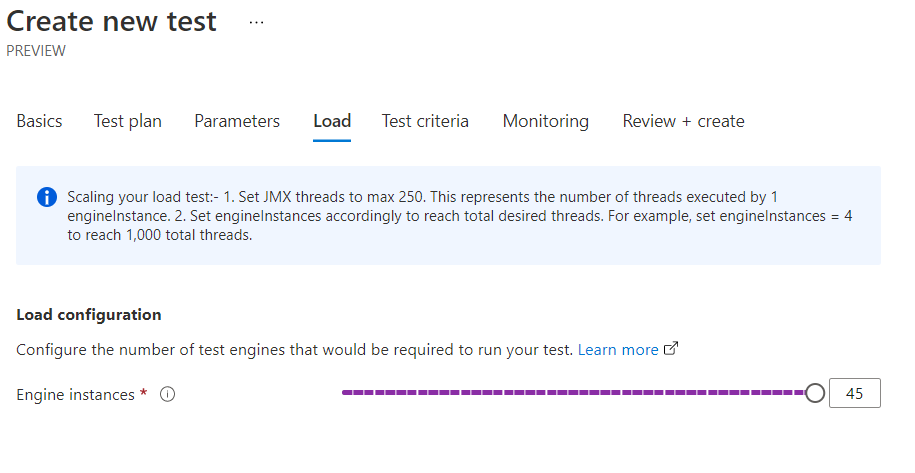

Choose how much load to apply. The help text here is really nice: it calls out that your tests should limit the number of threads to a maximum of 250. With the ability to use up to 45 engines, this caps the current capacity at 11,250 threads (45 × 250). My bet is that’s going to be plenty for most organizations’ load testing.
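The capacity figure is just multiplication, since each engine runs its own copy of the JMeter script. A minimal Python sketch of that arithmetic, assuming the 250-thread and 45-engine limits quoted above (both could change after the preview); the function names are hypothetical:

```python
# Limits quoted in the portal during the preview; they may change.
MAX_THREADS_PER_ENGINE = 250
MAX_ENGINES = 45


def max_threads(engines: int = MAX_ENGINES,
                threads_per_engine: int = MAX_THREADS_PER_ENGINE) -> int:
    """Total concurrent JMeter threads: every engine runs its own copy of the script."""
    return engines * threads_per_engine


def engines_needed(target_threads: int,
                   threads_per_engine: int = MAX_THREADS_PER_ENGINE) -> int:
    """Smallest engine count that reaches target_threads within the per-engine limit."""
    if target_threads <= 0:
        raise ValueError("target_threads must be positive")
    engines = -(-target_threads // threads_per_engine)  # integer ceiling division
    if engines > MAX_ENGINES:
        raise ValueError(
            f"{target_threads} threads needs {engines} engines; the limit is {MAX_ENGINES}")
    return engines
```

With these limits `max_threads()` gives 11,250, `engines_needed(5000)` gives 20, and one more thread (5,001) tips it over to 21 engines.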

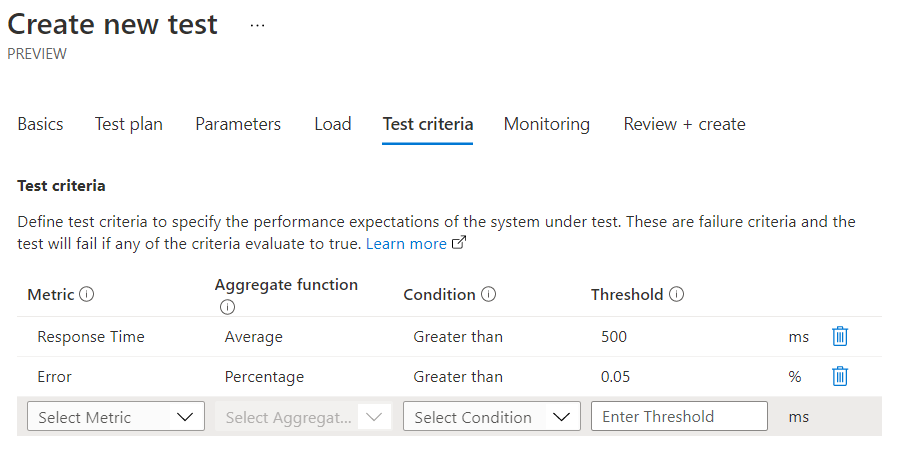

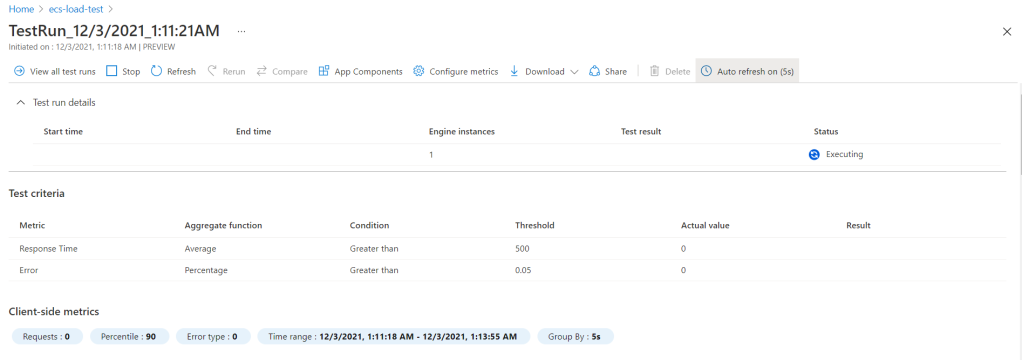

Next up, you can configure test criteria. This is an area where I’d like to see some improvements. At present the only options are average response time and percentage of errors. At an absolute minimum I feel like this should provide percentile options for response time, so that a test can fail when, for example, the 90th percentile response time exceeds a threshold.
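Until percentile criteria arrive, they are easy enough to compute yourself from the JMeter results. A minimal Python sketch, assuming you already have the per-request elapsed times in milliseconds. It uses the nearest-rank percentile definition (other tools may interpolate slightly differently), and the thresholds are hypothetical:

```python
import math
from statistics import mean
from typing import Dict, Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def evaluate_response_time(elapsed_ms: Sequence[float], avg_limit_ms: float,
                           p90_limit_ms: float) -> Dict[str, object]:
    """Compare the average criterion the service offers with a 90th percentile one."""
    avg = mean(elapsed_ms)
    p90 = percentile(elapsed_ms, 90)
    return {
        "average_ms": avg,
        "p90_ms": p90,
        "average_passed": avg <= avg_limit_ms,
        "p90_passed": p90 <= p90_limit_ms,
    }
```

This shows why the average alone can hide problems: with 17 requests at 100 ms and 3 at 2,000 ms, the average is 385 ms and passes a 500 ms limit, while the 90th percentile is 2,000 ms and fails a 1,000 ms limit.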

It’s worth noting that there’s no way to limit these criteria to only the critical requests, so tests might need careful tuning before the criteria can be used as an acceptance measure.
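One workaround is to evaluate the criteria yourself, restricted to the requests that matter, using the results file JMeter produces. This Python sketch assumes the results were saved in JMeter’s CSV format with a header row that includes the `label`, `elapsed` and `success` columns; the sampler labels used below are hypothetical:

```python
import csv
from typing import Dict, Iterable, List, TextIO


def critical_rows(jtl: TextIO, critical_labels: Iterable[str]) -> List[Dict[str, str]]:
    """Keep only the samples whose JMeter sampler label is in critical_labels."""
    wanted = set(critical_labels)
    return [row for row in csv.DictReader(jtl) if row["label"] in wanted]


def error_percentage(rows: List[Dict[str, str]]) -> float:
    """Percentage of samples JMeter marked as unsuccessful."""
    if not rows:
        raise ValueError("no matching samples")
    failures = sum(1 for row in rows if row["success"].strip().lower() != "true")
    return 100.0 * failures / len(rows)


def elapsed_ms(rows: List[Dict[str, str]]) -> List[int]:
    """Response times in milliseconds, ready for an average or percentile check."""
    return [int(row["elapsed"]) for row in rows]
```

For example, `with open("results.csv", newline="") as f: rows = critical_rows(f, ["List page", "Detail page"])` keeps only the two pages in the demo flow, so failures on static assets or other noise don’t skew the error percentage.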

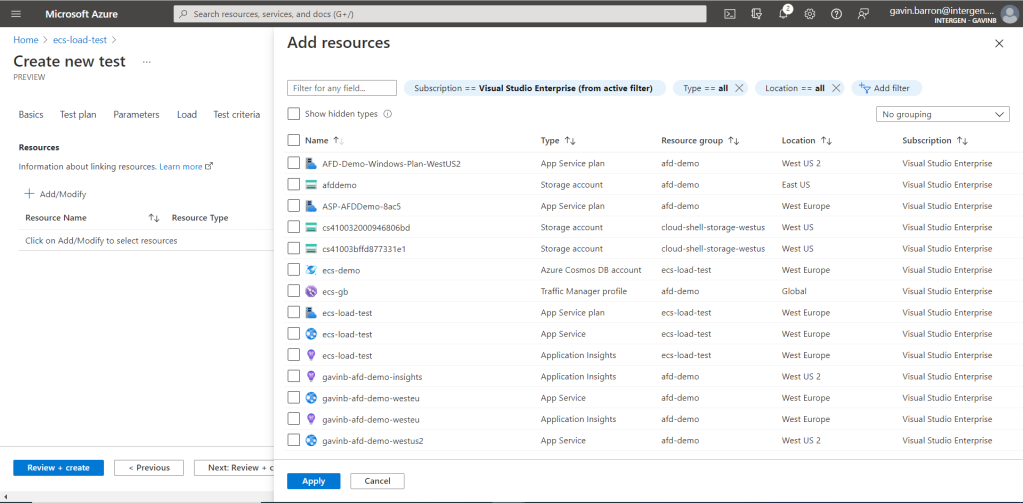

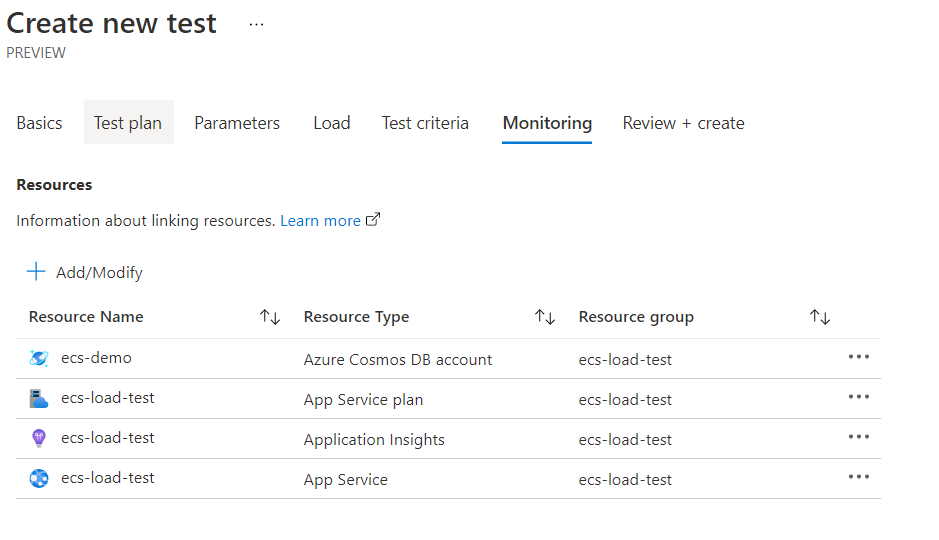

Then you can select the Azure resources that should be monitored. By default this shows all the resources in the subscription, but adding a resource group filter narrows the search nicely. It might be nice to open this dialog with the resource filter pane already open and awaiting input, as the view might be slow to populate in subscriptions with a lot of resources to choose from.

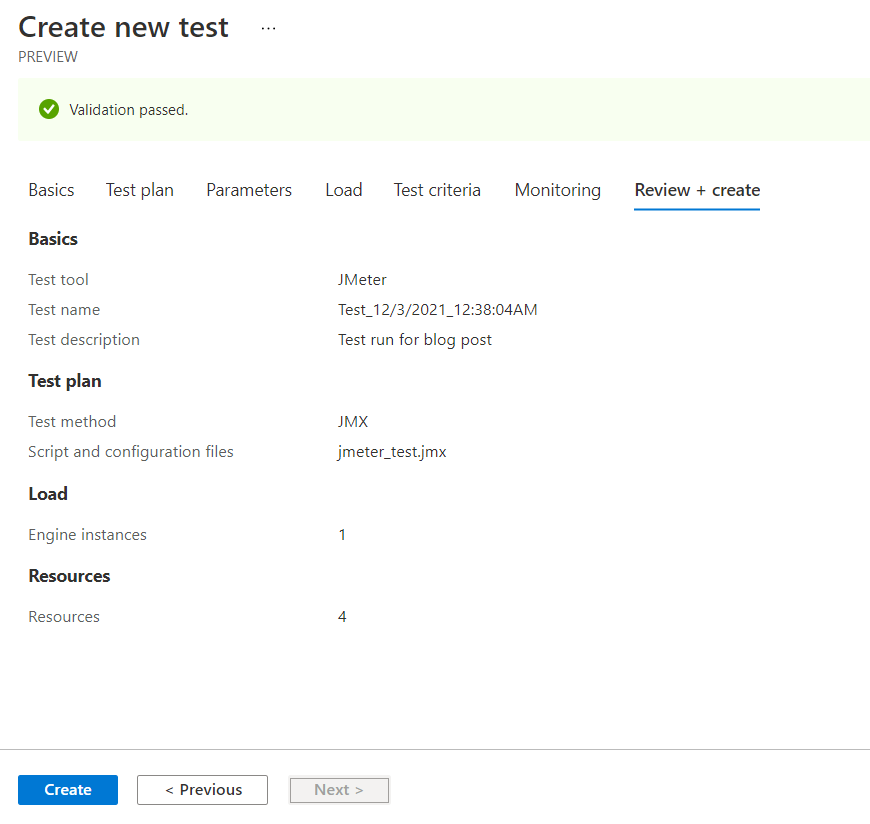

Finally, you can review the settings and create your test run:

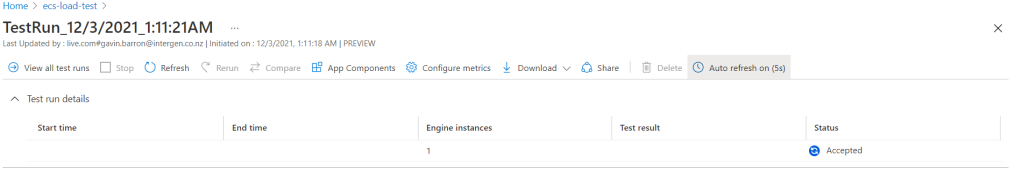

After you click Create, the test run moves through a number of states as the service does its work, starting with Accepted:

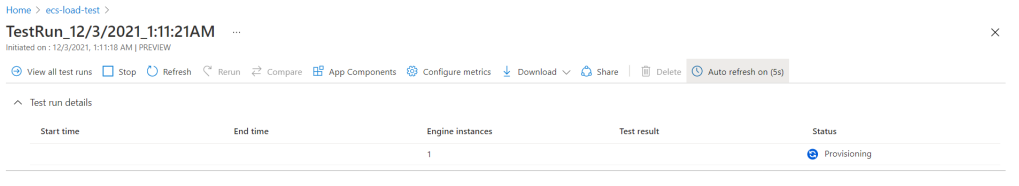

The service then starts provisioning the necessary infrastructure to run the tests.

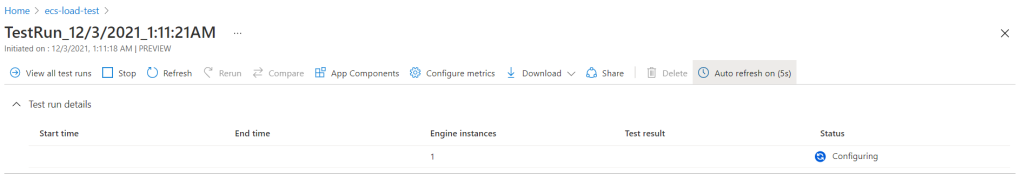

Next, it applies the configuration needed to run the test.

Then it starts running the test, in the Executing state:

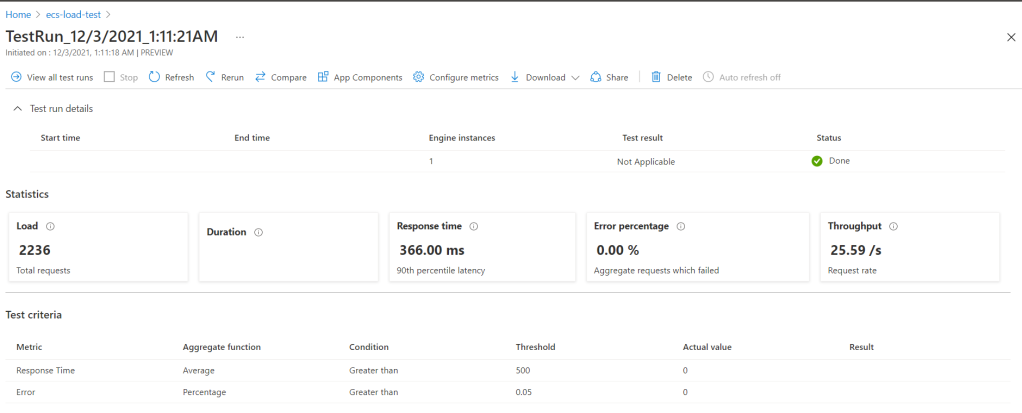

The tests finish and the run is Done.

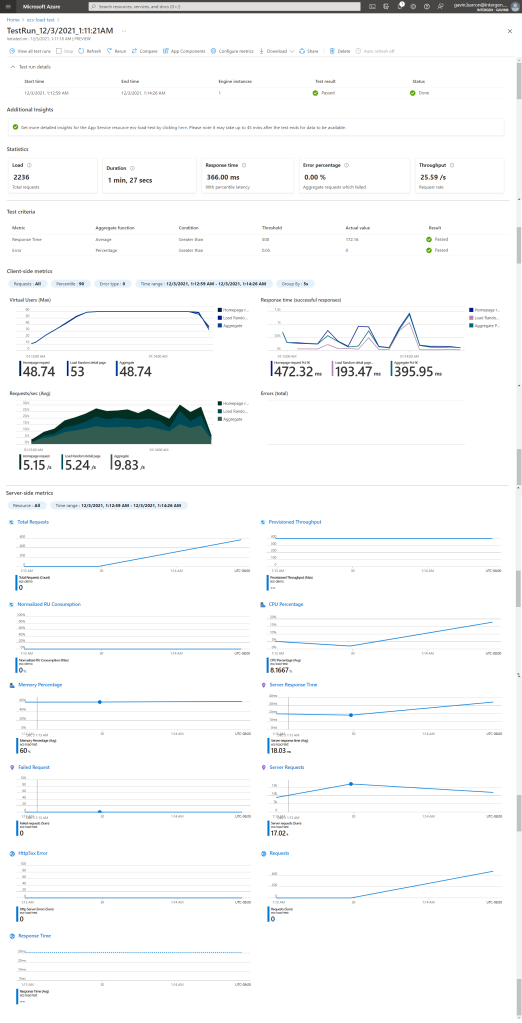

Don’t be fooled, though: the service now needs some time to aggregate the metrics from JMeter and the telemetry from the monitored Azure resources. It would be nice to show some feedback that this work is happening, because the current view looks, well, broken, with none of the graphs populated. After a while the graphs are populated and you get a view like this:

These graphs also contain a link to the App Service Diagnostics tool, since I had added an App Service to the list of monitored resources, which is nice. Unfortunately, the link applies a time filter covering a whole day rather than a time range centered on the test run. Zooming in on the relevant data would be a smart move, in my opinion.

In the command bar at the top of the test run report there are some useful tools:

- Rerun
  - Repeat this test run with the same JMeter test, but using any configuration changes applied to the test run, and of course any changes to the deployed code or the monitored Azure resources.
- Compare
  - Allows basic comparison between repeated runs of the same test run. Yeah, that’s as confusing as it sounds, but I assume this is to ensure that the things being compared are reasonably similar, as it is possible for a new test run in the same service to run a completely different JMeter test against another set of Azure resources.
- App Components
  - Add or remove Azure resources being monitored during the test run. This is really handy, as I definitely skipped past the step to set up the monitored resources in the creation wizard.
- Configure metrics
  - Add or remove the metrics shown on the page. For example, you might want to add a metric on HTTP status 4xx.
- Download
  - Download the JMeter-generated output. This is really useful for seeing more fine-grained data on what the JMeter client observed during testing.
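As an example of what the downloaded output enables, here’s a small Python sketch that summarizes the results per sampler label. It assumes the download contains (or extracts to) a JMeter CSV results file whose header row includes `label`, `elapsed` and `success`; the exact layout of the download isn’t covered here, and the file name below is hypothetical:

```python
import csv
from typing import Dict, TextIO


def summarize_by_label(jtl: TextIO) -> Dict[str, Dict[str, int]]:
    """Per-label request count, error count and slowest response from JMeter CSV output."""
    summary: Dict[str, Dict[str, int]] = {}
    for row in csv.DictReader(jtl):
        stats = summary.setdefault(row["label"], {"count": 0, "errors": 0, "max_ms": 0})
        stats["count"] += 1
        if row["success"].strip().lower() != "true":
            stats["errors"] += 1
        stats["max_ms"] = max(stats["max_ms"], int(row["elapsed"]))
    return summary
```

Usage would look something like `with open("results.csv", newline="") as f: print(summarize_by_label(f))`, which makes it quick to spot which page the errors or slow responses came from.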

So, about that demo during my session…

I knew I was taking a risk on this one, and the demo gods were cruel this time.

I was able to show my attendees the creation and setup of a test run, but unfortunately the service decided that it would not work for me and got stuck in the provisioning state for a few hours before failing 😦

Thankfully, I was able to pivot and show the results from a test run done earlier while preparing the demo.
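Looking back, a run that sits in Provisioning for hours is a good reason to put a timeout around any test run started from a script or pipeline. A minimal Python sketch of that idea: `get_status` is a hypothetical callable standing in for however you read the run’s status (no particular SDK or REST API is assumed), and the terminal state names simply mirror what the portal shows, so treat them as assumptions:

```python
import time
from typing import Callable, List

# Terminal states, named as the portal displays them; the exact strings are assumptions.
TERMINAL_STATES = {"Done", "Failed"}


def wait_for_test_run(get_status: Callable[[], str],
                      poll_seconds: float = 30,
                      max_seconds_per_state: float = 30 * 60,
                      clock: Callable[[], float] = time.monotonic,
                      sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Poll until the run reaches a terminal state; give up if it sits in one state too long."""
    history: List[str] = []
    entered_at = clock()
    while True:
        status = get_status()
        if not history or history[-1] != status:
            history.append(status)  # record each state transition once
            entered_at = clock()
        if status in TERMINAL_STATES:
            return history
        if clock() - entered_at > max_seconds_per_state:
            raise TimeoutError(
                f"test run stuck in {status!r} for more than {max_seconds_per_state} seconds")
        sleep(poll_seconds)
```

The timeout applies per state rather than to the whole run, so a long Executing phase is fine but a run stuck in Provisioning fails fast. `clock` and `sleep` are injectable so the logic can be tested without real waiting.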

On the whole, I think this service shows a lot of potential. I’m looking forward to experimenting more with it and trying to integrate it into some CI/CD pipelines next week when I’m back in the office.